FTC Investigates OpenAI's ChatGPT: What It Means For AI Regulation

The FTC's Concerns Regarding ChatGPT and OpenAI

The FTC's investigation into OpenAI and ChatGPT centers on several key areas of concern. The commission is reportedly examining potential violations related to data privacy, the spread of misinformation, and the generation of biased or discriminatory outputs. These concerns reflect a growing awareness of the risks associated with large language models (LLMs) and generative AI.

Specifically, the FTC's reported concerns include:

- Insufficient data security measures: Concerns exist about the security protocols surrounding the vast datasets used to train ChatGPT and the potential for data breaches or unauthorized access.

- Lack of transparency regarding data collection and usage: Questions have been raised about the transparency of OpenAI's data collection practices and the extent to which user data is used to train and improve the model. This ties directly to data privacy obligations under laws such as the California Consumer Privacy Act (CCPA) and the EU's General Data Protection Regulation (GDPR).

- Potential for generating biased or discriminatory content: ChatGPT, like many AI models, has been shown to generate outputs reflecting biases present in the training data. This raises concerns about potential discrimination and unfair outcomes.

- Risk of spreading misinformation and disinformation: The ability of ChatGPT to generate convincing but false information raises serious concerns about its potential for misuse in spreading misinformation and propaganda. This is especially critical in the current information landscape.

These concerns are drawn from news reports. The FTC has not released a detailed public statement outlining the specifics of its investigation, but the implications are far-reaching.

Implications for AI Development and Innovation

The FTC's investigation into OpenAI carries significant implications for the broader AI industry. It could create a "chilling effect," potentially slowing down the pace of AI development as companies become more cautious about pushing technological boundaries. This increased scrutiny may lead to:

- Slower pace of AI development due to stricter regulations: The need for greater compliance and rigorous testing could significantly increase development timelines and costs.

- Increased costs associated with compliance: Companies will need to invest heavily in ensuring data privacy, bias mitigation, and other regulatory compliance measures.

- Focus shift towards responsible AI development practices: The investigation could spur a positive shift towards more ethical and responsible AI development practices across the board.

- Potential for increased legal challenges and lawsuits: The investigation could set a precedent, potentially leading to more legal challenges and lawsuits against AI companies over data privacy violations or harmful AI outputs.

The investigation extends beyond OpenAI, signaling that other AI companies developing and deploying similar technologies will likely face increased scrutiny. This could lead to a more cautious and regulated approach to AI innovation, impacting the speed and scope of future advancements in artificial intelligence.

The Need for Clear AI Regulations and Ethical Guidelines

The FTC's investigation underscores the urgent need for clear and comprehensive regulations governing the development and deployment of AI, particularly large language models like ChatGPT. The ethical considerations surrounding AI are complex and multifaceted, requiring robust regulatory frameworks to address them effectively. Essential aspects of such regulations should include:

- Data privacy protection: Strong safeguards are crucial to protect user data from misuse and unauthorized access.

- Algorithmic transparency: Greater transparency is needed regarding how AI algorithms work and the data they use to make decisions.

- Accountability for AI-generated content: Clear lines of accountability are needed to address harm caused by AI-generated content, including misinformation and biased outputs.

- Bias mitigation strategies: Regulations should mandate the implementation of robust bias mitigation strategies to ensure fairness and equity in AI systems.

- Mechanisms for redress in case of harm caused by AI: Effective mechanisms are needed for individuals and organizations to seek redress in cases where they have suffered harm due to AI systems.

These rules will need to be designed and implemented carefully so that they balance innovation against protection from potential harms.

The Future of AI Regulation in Light of the OpenAI Investigation

The FTC's investigation into OpenAI could significantly shape the future of AI regulation. Several scenarios may unfold:

- Increased government oversight of AI companies: We could see increased government regulation and oversight of AI companies, potentially including stricter licensing requirements and compliance audits.

- Development of industry self-regulatory bodies: The industry may proactively develop self-regulatory bodies to establish ethical standards and best practices for AI development.

- International agreements on AI ethics and standards: International cooperation will likely become more critical in establishing global standards and ethical guidelines for AI.

- The establishment of new regulatory agencies focused on AI: New specialized regulatory agencies focused on AI could be created to oversee the development and deployment of AI technologies.

International collaboration will be crucial to ensure consistent and effective AI regulation, given the global nature of AI development and deployment.

Conclusion: Understanding the FTC's Investigation of OpenAI's ChatGPT and its Impact on AI Regulation

The FTC's investigation into OpenAI's ChatGPT highlights the significant concerns surrounding the responsible development and deployment of advanced AI technologies like large language models. The investigation's implications are far-reaching: it could affect the pace of AI innovation, prompt a greater focus on ethical considerations, and make clear, robust regulatory frameworks a necessity. Addressing data privacy, bias mitigation, and accountability for AI-generated content is paramount. The future of AI regulation will likely involve increased government oversight, industry self-regulation, and international cooperation.

Stay informed about the evolving landscape of AI regulation following the FTC's investigation into OpenAI's ChatGPT. Learn more about responsible AI development and participate in the conversation shaping the future of artificial intelligence. Understanding and addressing the ethical challenges surrounding AI regulation, including generative AI and LLMs, is crucial for ensuring a future where AI benefits all of humanity.

Featured Posts

-

T Mobile To Pay 16 Million For Data Security Failures Over Three Years

Apr 24, 2025

T Mobile To Pay 16 Million For Data Security Failures Over Three Years

Apr 24, 2025 -

Us Stock Futures Surge Trumps Powell Comments Boost Markets

Apr 24, 2025

Us Stock Futures Surge Trumps Powell Comments Boost Markets

Apr 24, 2025 -

Cassidy Hutchinson Key Witness To January 6th Announces Memoir

Apr 24, 2025

Cassidy Hutchinson Key Witness To January 6th Announces Memoir

Apr 24, 2025 -

Reduced Funding And Increased Tornado Risk A Critical Examination Of The Trump Era

Apr 24, 2025

Reduced Funding And Increased Tornado Risk A Critical Examination Of The Trump Era

Apr 24, 2025 -

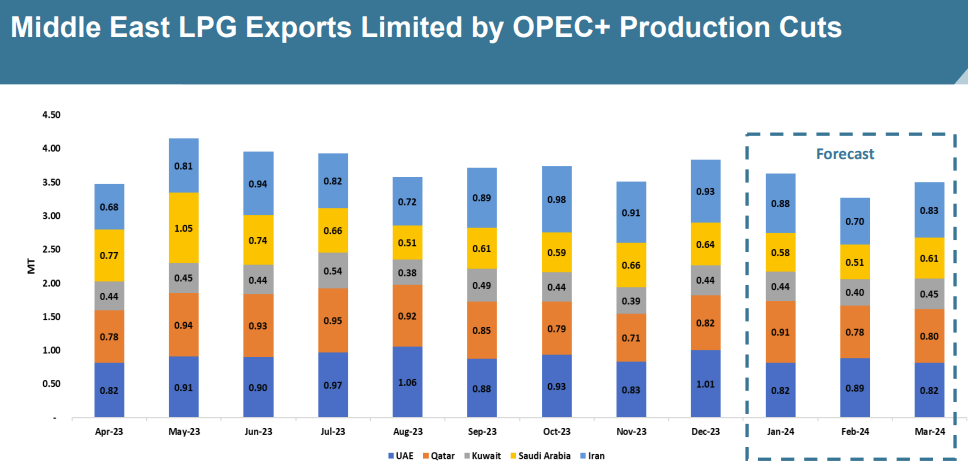

Impact Of Us Tariffs Chinas Turn To Middle East For Lpg Supply

Apr 24, 2025

Impact Of Us Tariffs Chinas Turn To Middle East For Lpg Supply

Apr 24, 2025